Vera2, AI and Technology

MiniMax M2.1

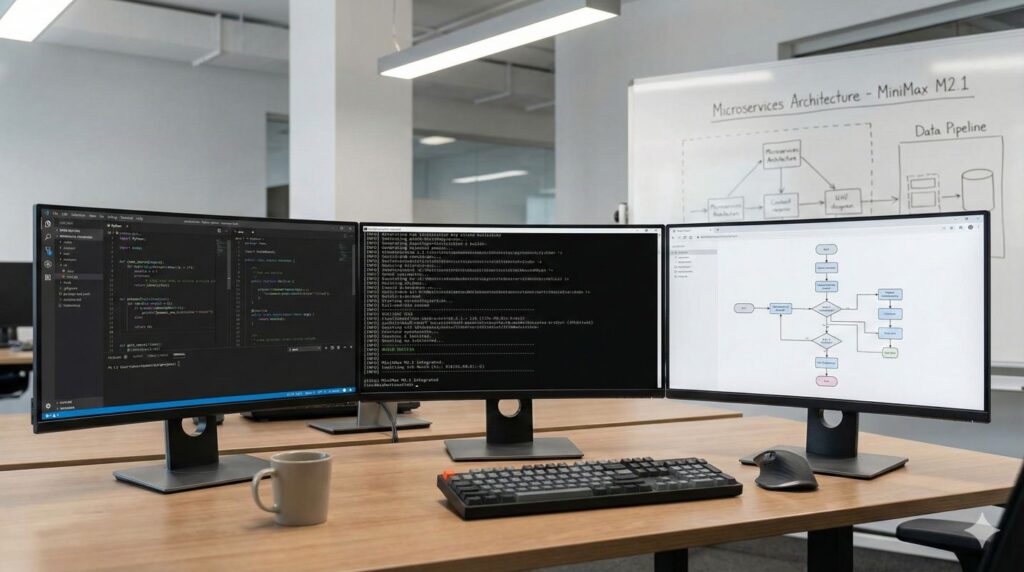

MiniMax M2.1: A New Era for Complex Programming Tasks

MiniMax M2.1 is a new large language model positioned for real-world software work, where developers don’t live in a single language, a single framework, or a single repo. The pitch is straightforward: stronger multi-language programming, better refactoring, and better long-horizon “agentic” workflows that involve tool use and iterative planning rather than one-shot code dumps. M2.1 is offered through MiniMax’s platform and also appears in the places developers commonly use to evaluate models, including model cards and third-party routing providers (MiniMax API Docs; MiniMax).

What makes this release notable is not the generic claim that it “codes well.” The emphasis is on complex programming tasks: multi-file changes, multi-step debugging, web and mobile build targets, and cross-language refactors that require understanding different idioms and runtime constraints. MiniMax’s own materials describe M2.1 as a Mixture-of-Experts-style model with 230B total parameters and 10B activated per inference, optimized for code generation and refactoring, and marketed as “polyglot” for multi-language work (MiniMax API Docs).

This article explains what MiniMax M2.1 is, what’s been publicly claimed about its architecture and benchmarks, how it fits into coding workflows, and what to watch if you are considering it for production usage.

Overview of MiniMax M2.1

MiniMax M2.1 is presented as a developer-oriented model designed for complex programming tasks, especially when a project spans multiple languages and requires consistent reasoning across longer sessions. The company’s announcement frames it as “significantly enhanced” for multi-language programming and “built for real-world complex tasks,” which is a direct attempt to compete on practical engineering value rather than only academic benchmarks (MiniMax).

MiniMax’s platform documentation lists MiniMax-M2.1 with “230B total parameters with 10B activated per inference,” and describes it as optimized for code generation and refactoring with polyglot code capability and enhanced reasoning (MiniMax API Docs). This matters because it implies an efficiency goal: high capability with less active compute per token than a dense model of the same total size, a tradeoff that can show up as better latency and cost efficiency for coding assistants and agent-style tools.
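To make the efficiency argument concrete, the arithmetic behind the two published figures can be sketched as follows. The helper name `active_fraction` is illustrative, and the result is only a rough proxy for per-token compute, not a latency or pricing prediction.

```python
# Figures quoted from MiniMax's platform documentation.
TOTAL_PARAMS_B = 230   # total parameters, in billions
ACTIVE_PARAMS_B = 10   # parameters activated per inference, in billions


def active_fraction(active_b: float, total_b: float) -> float:
    """Share of the model's parameters that take part in one forward pass."""
    if total_b <= 0:
        raise ValueError("total_b must be positive")
    return active_b / total_b


fraction = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
print(f"Active share per token: {fraction:.1%}")  # prints "Active share per token: 4.3%"
```

Note that in a Mixture-of-Experts design the full set of weights typically still has to be loaded to serve the model, so the savings show up in compute per token rather than in memory footprint.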

MiniMax has also published a guide titled “M2.1 for AI Coding Tools,” which emphasizes multi-turn code understanding and reasoning for coding workflows (MiniMax API Docs). That focus is consistent with how many teams actually use these models today: as code reviewers, refactoring copilots, test writers, and glue-code generators that sit inside an agent loop.

Where MiniMax M2.1 is available

MiniMax M2.1 is accessible through MiniMax’s own platform model catalog, and is also listed by third-party routing providers that publish context length and pricing metadata (MiniMax API Docs). It also appears in open model distribution contexts such as Hugging Face model pages, which can matter for evaluation and reproducibility (Hugging Face).

Key Features and Capabilities

Multi-language programming emphasis

The defining claim of MiniMax M2.1 is stronger performance across multiple programming languages, not just Python-heavy tasks. That matters because real systems often combine TypeScript or JavaScript on the front end, a different backend language, infrastructure-as-code, and sometimes mobile clients. MiniMax’s own positioning is explicit that M2.1 is built for multi-language programming and complex programming tasks rather than narrow code-gen demos (MiniMax).

In practice, the “multi-language programming” pitch only matters if the model maintains consistency when switching contexts: translating a data model from one language to another, updating API contracts across services, and refactoring shared logic without breaking builds. Those are the work patterns that separate toy coding from production coding.

Code generation plus refactoring, not just greenfield code

MiniMax’s model description highlights refactoring as a first-class use case (MiniMax API Docs). That’s significant because refactoring is where models tend to fail in subtle ways: the rewritten code may compile locally, yet break integration boundaries, remove necessary side effects, or ignore performance constraints that were implicit in the original code. A model marketed for refactoring is effectively claiming it can handle “change work” rather than only “new code.”

If MiniMax M2.1 performs as claimed, it should be useful for tasks like tightening error handling without changing external behavior, rewriting modules for clarity while preserving interfaces, and making incremental changes across multiple files with correct imports and type constraints.
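One way to check that a refactor tightened error handling without changing external behavior is a small characterization test that compares the old and new versions on the same inputs, including the failure cases. The sketch below is illustrative: `parse_port`, its two rewrites, and the input list are hypothetical and not taken from any MiniMax material.

```python
from typing import Any, Callable, Iterable, List, Tuple


def outcome(fn: Callable[[Any], Any], value: Any) -> Tuple[str, Any]:
    """Record what a caller would observe: a return value or an exception type."""
    try:
        return ("ok", fn(value))
    except Exception as exc:
        return ("raised", type(exc).__name__)


def behavior_mismatches(old: Callable, new: Callable, inputs: Iterable[Any]) -> List[Any]:
    """Return the inputs on which the two implementations behave differently."""
    return [value for value in inputs if outcome(old, value) != outcome(new, value)]


def parse_port(text):                 # hypothetical original
    return int(text)


def parse_port_refactored(text):      # clearer error message, same exception types
    try:
        return int(text)
    except ValueError:
        raise ValueError(f"invalid port: {text!r}") from None


def parse_port_broken(text):          # "helpful" rewrite that swallows errors
    try:
        return int(text)
    except (ValueError, TypeError):
        return 0


samples = ["80", " 443 ", "http", None]
print(behavior_mismatches(parse_port, parse_port_refactored, samples))  # prints []
print(behavior_mismatches(parse_port, parse_port_broken, samples))      # prints ['http', None]
```

The comparison deliberately ignores error messages and looks only at return values and exception types, which is the contract most callers depend on.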

Agentic workflow support and tool use

MiniMax’s documentation describes M2.1 as supporting function calling and “interleaved thinking,” meaning the model can reason between tool interactions to decide the next action in a multi-step workflow (MiniMax API Docs). In practical terms, that design targets agent patterns: run tests, read logs, search a repository, patch code, re-run checks, and keep going until the objective is met.
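The shape of such a loop can be sketched without any real API. In the example below the model is replaced by a scripted stand-in, and the `Step` type, the tool names, and `run_agent` are all hypothetical; the point is only the alternation of a reasoning step and a tool result, not MiniMax’s actual interface.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Step:
    thought: str                          # reasoning emitted before acting
    tool: Optional[str] = None            # tool to call, or None when finished
    args: Dict[str, str] = field(default_factory=dict)


def run_agent(model: Callable[[List[str]], Step],
              tools: Dict[str, Callable[..., str]],
              goal: str, max_steps: int = 8) -> List[str]:
    """Alternate model reasoning and tool results until the model stops."""
    transcript = [f"goal: {goal}"]
    for _ in range(max_steps):
        step = model(transcript)
        transcript.append(f"thought: {step.thought}")
        if step.tool is None:
            break
        if step.tool not in tools:
            transcript.append(f"error: unknown tool {step.tool}")
            continue
        transcript.append(f"{step.tool} -> {tools[step.tool](**step.args)}")
    return transcript


state = {"patched": False}                # toy repository state


def run_tests() -> str:
    return "0 failed" if state["patched"] else "1 failed"


def apply_patch(file: str) -> str:
    state["patched"] = True
    return f"patched {file}"


def scripted_model(transcript: List[str]) -> Step:
    """Stand-in for a real model: decides the next action from the transcript."""
    test_runs = sum(line.startswith("run_tests") for line in transcript)
    if test_runs == 0:
        return Step("See which tests fail first.", "run_tests")
    if not any(line.startswith("apply_patch") for line in transcript):
        return Step("One test fails; patch the parser.", "apply_patch", {"file": "parser.py"})
    if test_runs < 2:
        return Step("Re-run the tests to confirm the fix.", "run_tests")
    return Step("All checks pass; stopping.")


transcript = run_agent(scripted_model,
                       {"run_tests": run_tests, "apply_patch": apply_patch},
                       goal="make the test suite pass")
print("\n".join(transcript))
```

The `max_steps` cap is a deliberate design choice: a loop that cannot terminate on its own should still terminate.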

MiniMax also maintains a reference “Mini Agent” project that describes best practices for building agents with M2.1 using an API approach positioned as compatible with Anthropic-style interfaces (GitHub). That kind of reference implementation matters because most failures in agentic coding aren’t raw model failures. They’re orchestration failures: context management, tool schema design, guardrails, and output validation.
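Tool schema design and guardrails can be illustrated with a small validator that checks a model’s proposed tool call before anything is executed. This is a sketch under assumptions: the schema layout, the `read_file` tool, and the path rule are hypothetical and do not reflect the format used by MiniMax or the Mini Agent project.

```python
from typing import Any, Dict, List

# Hypothetical tool schema; field names are illustrative only.
READ_FILE_TOOL: Dict[str, Any] = {
    "name": "read_file",
    "required": {"path": str},
    "optional": {"max_bytes": int},
}


def validate_call(schema: Dict[str, Any], args: Dict[str, Any]) -> List[str]:
    """Return a list of problems with a proposed tool call (empty means valid)."""
    errors: List[str] = []
    allowed = {**schema["required"], **schema["optional"]}
    for key in schema["required"]:
        if key not in args:
            errors.append(f"missing required argument: {key}")
    for key, value in args.items():
        if key not in allowed:
            errors.append(f"unexpected argument: {key}")
        elif not isinstance(value, allowed[key]):
            errors.append(f"{key} should be {allowed[key].__name__}")
    # Guardrail: refuse absolute paths and parent-directory traversal.
    path = args.get("path")
    if isinstance(path, str) and (path.startswith("/") or ".." in path.split("/")):
        errors.append("path must stay inside the repository")
    return errors


print(validate_call(READ_FILE_TOOL, {"path": "src/app.py"}))
print(validate_call(READ_FILE_TOOL, {"path": "../secrets", "mode": "rw"}))
```

Returning every problem at once, rather than failing on the first, gives the model a complete error message to correct against on its next turn.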

Benchmarks and What They Actually Mean

MiniMax and third-party reporting have highlighted results on benchmarks designed to reflect real application execution rather than purely static coding questions. TechRepublic reported that MiniMax introduced a benchmark called VIBE and cited an 88.6 average score for M2.1, with particularly strong results in VIBE-Web and VIBE-Android (TechRepublic). The Hugging Face model page also references strong VIBE performance, including the same aggregate figure (Hugging Face).

Benchmarks like this matter if they actually capture what teams care about: does the generated app run, does the UI behave, do the dependencies resolve, do build steps work, and does the result match requirements? “Execution-aware” evaluation is closer to real engineering than a code snippet that passes a superficial unit test.
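The idea of execution-aware evaluation can be shown in miniature: instead of inspecting generated code as text, run it and check what it does. The sketch below is not how VIBE works (its methodology is not described here); `runs_and_prints` is a hypothetical helper, and real use would need a proper sandbox, since this runs the candidate code directly on the host.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def runs_and_prints(source: str, expected_stdout: str, timeout_s: float = 10.0) -> bool:
    """Execute candidate Python source in a subprocess and compare its stdout."""
    with tempfile.TemporaryDirectory() as workdir:
        script = Path(workdir) / "candidate.py"
        script.write_text(source, encoding="utf-8")
        try:
            proc = subprocess.run(
                [sys.executable, str(script)],
                capture_output=True, text=True,
                timeout=timeout_s, cwd=workdir,
            )
        except subprocess.TimeoutExpired:
            return False                   # hanging code counts as a failure
    return proc.returncode == 0 and proc.stdout.strip() == expected_stdout.strip()


print(runs_and_prints("print(2 + 2)", "4"))   # runs and matches
print(runs_and_prints("print(2 +", "4"))      # syntax error, so it fails
```

A snippet that looks plausible but does not parse, crashes, or hangs fails this check, which is the property a purely textual comparison cannot provide.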

Still, treat any benchmark as one signal, not proof. For complex programming tasks, the real differentiators are consistency, error recovery, and adherence to constraints over long sessions.

How MiniMax M2.1 Fits Real Developer Workflows

Coding copilots and review assistants

In day-to-day use, MiniMax M2.1 is positioned to act like a high-end copilot: propose code, explain tradeoffs, and help write tests and refactors. The key question is whether it can do so reliably across multi-language programming environments, where “correct” often means “consistent with the existing codebase,” not “valid in isolation.”

Refactoring and modernization

The model’s explicit refactoring positioning suggests a target use case of modernizing legacy code: migrating patterns, improving structure, and tightening reliability without breaking public APIs. For teams under deadline pressure, this is where an LLM can create huge leverage if it respects constraints and minimizes unintended side effects.

Agent loops for complex programming tasks

The most ambitious usage is an agent loop that runs tools, edits files, and iterates toward a goal. MiniMax’s documentation on interleaved tool use is explicitly aimed at these long-horizon tasks (MiniMax API Docs). If you’re building such a workflow, you should still treat the model as probabilistic: enforce strict validation, run tests, gate merges, and use smaller scopes per iteration.
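Those safeguards can be expressed as a deterministic gate that sits outside the model. The sketch below assumes a hypothetical `IterationResult` record produced by your own CI tooling; the field names and the default file limit are illustrative choices, not recommendations from MiniMax.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class IterationResult:
    files_changed: int    # size of the change the agent proposed
    tests_passed: bool    # outcome of the project's test suite
    lint_passed: bool     # outcome of static checks


def may_merge(result: IterationResult, max_files: int = 5) -> Tuple[bool, str]:
    """Decide whether an agent iteration is allowed to merge, with a reason."""
    if result.files_changed > max_files:
        return False, f"scope too large: {result.files_changed} files (limit {max_files})"
    if not result.tests_passed:
        return False, "tests failed"
    if not result.lint_passed:
        return False, "lint failed"
    return True, "ok"


print(may_merge(IterationResult(files_changed=2, tests_passed=True, lint_passed=True)))
print(may_merge(IterationResult(files_changed=12, tests_passed=True, lint_passed=True)))
```

Because the gate never consults the model, a confident but wrong explanation from the agent cannot talk its way past it.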

Practical Caveats and What to Watch

MiniMax M2.1 may be strong, but production use still demands discipline. Complex programming tasks tend to fail at boundaries: authentication, concurrency, data migrations, and performance regressions. Multi-language programming can also mask subtle “near-correct” bugs, especially when translating types, encoding rules, and error semantics across stacks.

If you evaluate MiniMax M2.1, watch for three things. First, whether it keeps changes minimal and avoids rewriting unrelated code. Second, whether it respects existing architecture and conventions. Third, whether its “agentic” loop actually converges faster than a simpler approach using a smaller model plus deterministic tooling.
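The first of those checks, whether changes stay minimal, is easy to measure. A rough sketch using Python’s standard `difflib` counts how many lines a patch adds or removes; the function name and the sample snippets are hypothetical.

```python
import difflib


def changed_lines(before: str, after: str) -> int:
    """Count lines removed from `before` plus lines added in `after`."""
    old, new = before.splitlines(), after.splitlines()
    matcher = difflib.SequenceMatcher(a=old, b=new, autojunk=False)
    total = 0
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            total += (i2 - i1) + (j2 - j1)
    return total


original = "def area(w, h):\n    return w * h\n\ndef perimeter(w, h):\n    return 2 * (w + h)\n"
focused = original.replace("return w * h", "return abs(w * h)")
print(changed_lines(original, original))  # prints 0
print(changed_lines(original, focused))   # prints 2 (one line removed, one added)
```

Tracking this number per task across models gives a simple signal for how often an assistant rewrites code it was not asked to touch.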

Bottom Line

MiniMax M2.1 is being positioned as a serious model for complex programming tasks, with explicit emphasis on multi-language programming, refactoring, and long-horizon agent workflows. Its published specs describe an MoE-style approach with 230B total parameters and 10B active per inference, and MiniMax has highlighted execution-oriented benchmark results such as VIBE (MiniMax API Docs; Hugging Face).

If those claims hold up in real repos, MiniMax M2.1 could be a strong option for teams that need cross-language competence and iterative workflows rather than one-shot code generation. The honest next step is not hype. It’s evaluation: run it against your actual backlog and measure whether it reduces cycle time without increasing defect risk.

Further Reading

MiniMax published its announcement post introducing MiniMax M2.1 as “significantly enhanced” for multi-language programming and “built for real-world complex tasks,” including links to access the API and related tooling: https://www.minimax.io/news/minimax-m21


MiniMax’s platform documentation lists MiniMax-M2.1 model details and positioning, including parameter and activation notes and emphasis on code generation and refactoring: https://platform.minimax.io/


MiniMax’s “M2.1 for AI Coding Tools” guide outlines how the company expects developers to use MiniMax M2.1 in coding tool workflows and API access patterns: https://platform.minimax.io/docs/guides/text-ai-coding-tools


TechRepublic reported on MiniMax M2.1’s release and summarized the company’s VIBE benchmark claims, including the reported aggregate score and subset performance for web and Android: https://www.techrepublic.com/article/news-minimax-m2-release/


The Hugging Face model page for MiniMax-M2.1 provides a model-card style overview and repeats the reported VIBE aggregate and subset scores as part of its capability summary: https://huggingface.co/MiniMaxAI/MiniMax-M2.1


MiniMax’s Mini-Agent GitHub repository provides a reference implementation and best-practice demo for building agents with MiniMax M2.1, including discussion of interleaved thinking and agent workflows: https://github.com/MiniMax-AI/Mini-Agent

